The initial development and conceptual-model validation work follows the design shown below. It requires changes to both Dataverse and the DVUploader.

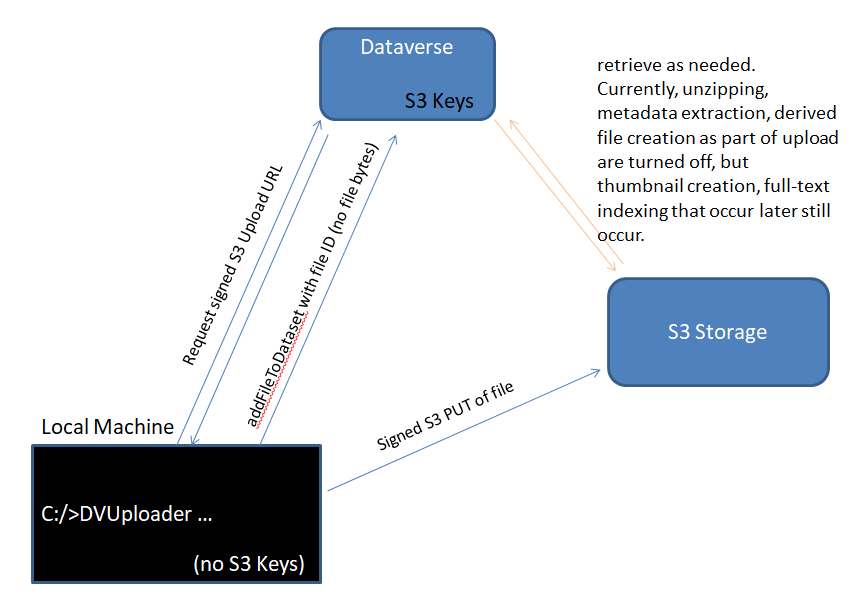

From their local machine (where the data resides as files), the user runs the DVUploader.

DVUploader scans the specified directories/files (as it normally does) and, for each file, requests a pre-signed upload URL from Dataverse so it can upload that file to a given Dataset.
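As a rough illustration of the scanning step, here is a minimal Python sketch (the actual DVUploader is written in Java). It assumes each file's directoryLabel is its parent folder relative to the directory the user specified; `scan_for_upload` is a hypothetical name, not a DVUploader function.

```python
import os
from pathlib import Path


def scan_for_upload(root):
    """Yield (absolute_path, directory_label) for every regular file under root.

    Assumption: directory_label is the file's parent directory relative to
    root, with '/' separators, and "" for files directly in root.
    """
    root_path = Path(root).resolve()
    for dirpath, dirnames, filenames in os.walk(root_path):
        dirnames.sort()  # deterministic traversal order (os.walk honors in-place edits)
        for name in sorted(filenames):
            full = Path(dirpath) / name
            if not full.is_file():
                continue  # skip broken symlinks, sockets, etc.
            rel_parent = full.parent.relative_to(root_path)
            label = "" if rel_parent == Path(".") else rel_parent.as_posix()
            # In the flow described above, each pair would trigger one
            # request to Dataverse for a presigned upload URL.
            yield str(full), label
```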

Dataverse, using the secret keys for its configured S3 storage, creates a short-lived URL that allows upload of one new file directly to the storage area in S3 specified for the Dataset.

DVUploader uses the URL to send the data directly to S3 with an HTTP PUT. This avoids streaming the data through Glassfish and into a temporary file on the Dataverse server, so transfer speed is governed by the network between the local machine and the S3 store rather than by the bandwidth to/from the Dataverse server or the disk read/write speed at Dataverse.
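A minimal Python sketch of the direct PUT is below, assuming the presigned URL was signed without a specific Content-Type (if it was, the header sent must match). `HashingReader` and `build_direct_put` are hypothetical helpers; wrapping the file this way lets the MD5 hash be computed in the same pass that streams the bytes to S3.

```python
import hashlib
import os
import urllib.request


class HashingReader:
    """File-like wrapper: every chunk read (e.g. by http.client while it sends
    the request body) is also fed into an MD5 digest."""

    def __init__(self, fileobj):
        self._f = fileobj
        self.md5 = hashlib.md5()

    def read(self, size=-1):
        chunk = self._f.read(size)
        self.md5.update(chunk)
        return chunk


def build_direct_put(presigned_url, fileobj, size):
    """Build (but do not send) a PUT of an open binary file to a presigned URL."""
    body = HashingReader(fileobj)
    request = urllib.request.Request(
        presigned_url,
        data=body,
        method="PUT",
        headers={
            # An explicit length stops urllib from falling back to chunked encoding.
            "Content-Length": str(size),
            # Assumption: the URL was not signed for a specific Content-Type; without
            # this header urllib would add application/x-www-form-urlencoded.
            "Content-Type": "application/octet-stream",
        },
    )
    return request, body


# Usage (network call shown but not executed here):
# with open(path, "rb") as f:
#     req, body = build_direct_put(url, f, os.path.getsize(path))
#     with urllib.request.urlopen(req) as resp:
#         assert resp.status == 200
#     md5_hex = body.md5.hexdigest()  # later sent to Dataverse with the add call
```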

DVUploader then calls the existing Dataverse /api/datasets/{dataset id}/add API but, instead of sending the file bytes, sends the ID of the file as stored in S3, along with its name, mimetype, MD5 hash, and any directoryLabel (path) that would normally be sent.
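The metadata that replaces the file bytes might be assembled as below. This is a sketch: the JSON field names (`storageIdentifier`, `fileName`, `mimeType`, `checksum`, `directoryLabel`) are assumptions for illustration, as is the idea that the JSON travels as the `jsonData` form field of the add call with no file part.

```python
import json


def build_add_metadata(storage_identifier, file_name, mime_type, md5_hex,
                       directory_label=""):
    """Build the JSON sent to /api/datasets/{dataset id}/add in place of the
    file bytes. All field names here are illustrative assumptions."""
    metadata = {
        "storageIdentifier": storage_identifier,  # where the bytes already live in S3
        "fileName": file_name,
        "mimeType": mime_type,  # as supplied by the client; no content inspection
        "checksum": {"@type": "MD5", "@value": md5_hex},
    }
    if directory_label:
        metadata["directoryLabel"] = directory_label
    return json.dumps(metadata)
```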

Dataverse runs through its normal steps to add the file and its metadata to the Dataset, currently skipping steps that would require access to the file bytes (e.g. unzipping the file, inspecting it to infer a better mimetype, extracting metadata, creating derived files, etc.). For a file that would not trigger such special processing, the net result is exactly the same as if the file had been uploaded through Dataverse via the web interface.

The work so far shows that it is possible to upload data directly from a local machine to an S3 store without going through Glassfish, without using temporary local storage at Dataverse, and without using the network between the local machine and Dataverse or between Dataverse and the S3 store. Performance testing still needs to be done, but because previous testing showed that Glassfish and/or the temporary local storage add delays and server load, direct upload should make uploads faster. If the network between the data and the S3 store is faster (e.g. the data is local to the S3 store), additional performance gains would be expected.

The proof of concept (POC) also shows that this design works with both Amazon’s S3 implementation and the Minio S3 implementation (which is in use at TACC). There are minor differences between the two, which are handled in the Dataverse and DVUploader software.

The design itself is intended to allow direct upload without creating a security concern that a user could upload, edit, or delete other files in S3. Unlike designs in which the S3 keys used by Dataverse, or keys derived from them for a specific user, would have to be sent to the user’s machine, where they could potentially be misused or stolen, this design sends a presigned URL that only allows an HTTP PUT call to upload one file, with the location/id of that file specified by Dataverse. The S3 keys at Dataverse are used to create a cryptographic signature that is included as a parameter in the URL. The S3 implementation uses that signature to verify that the PUT, for this specific file, was authorized by Dataverse; any change to the request that tries to read, delete, or edit this or any other file would invalidate the signature. The signature is also valid for only a relatively short time (configurable; the default is 60 minutes), further limiting opportunities for misuse. Note that using the Dataverse API requires the user’s access key (generated via the Dataverse GUI), which allows the user to do anything via the API that they can do via the GUI. The important point here is that this access key, which is already required to use the DVUploader with the standard upload mechanism, is more powerful, and more important to keep safe, than the presigned URLs added by the new design. (FWIW: There are discussions at IQSS/GDCC about how to provide more limited API keys from Dataverse that would mimic the presigned URL mechanism.)
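To make the signature argument concrete, here is a deliberately simplified HMAC-based signed URL in Python. It is not AWS Signature Version 4 (which S3 actually uses and which signs more of the request), and `SECRET`, `presign_put`, and `verify` are hypothetical. It only shows why a URL signed for one PUT to one object path stops working if the method, path, or expiry is changed, or once it expires.

```python
import hashlib
import hmac
import time
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical secret; in the real design this role is played by the S3 keys,
# which never leave the Dataverse server.
SECRET = b"server-side-secret"


def _signature(method, path, expires):
    # The signed string binds the HTTP method, the exact object path, and the expiry.
    message = f"{method}\n{path}\n{expires}".encode()
    return hmac.new(SECRET, message, hashlib.sha256).hexdigest()


def presign_put(base_url, path, lifetime_s=60 * 60, now=None):
    """Return a URL authorizing one PUT to `path` for lifetime_s seconds."""
    now = int(time.time()) if now is None else now
    expires = now + lifetime_s
    query = urlencode({"Expires": expires, "Signature": _signature("PUT", path, expires)})
    return f"{base_url}{path}?{query}"


def verify(method, url, now=None):
    """What the storage side checks: same method, same path, not expired."""
    now = int(time.time()) if now is None else now
    parts = urlsplit(url)
    params = parse_qs(parts.query)
    try:
        expires = int(params["Expires"][0])
        signature = params["Signature"][0]
    except (KeyError, ValueError):
        return False
    if now > expires:
        return False
    expected = _signature(method, parts.path, expires)
    return hmac.compare_digest(expected, signature)
```

Because the client never sees `SECRET`, it cannot produce a valid signature for any other method, object, or expiry time.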

In addition to validating the design, the POC involved working through Dataverse’s two-phase, ~10-step upload process and learning how to separate out and, for now, turn off the steps that involve reading the file itself, while keeping the processing that adds the file to the dataset, records its metadata, creates a new dataset version if needed, etc. While this code will probably need further modification/clean-up, having the POC working is a significant step.

The POC now (as of Dec. 5th) supports configuring multiple file stores for a Dataverse instance. Implementing this involved code changes in classes related to file/S3/Swift access and in other places where the storage location of a dataset is interpreted. It requires changing some Glassfish Java properties but does not affect Dataverse’s database structure, and it can be made backward compatible for current data.

In stock Dataverse today, the storageidentifier field for files includes a prefix representing the type of file store used (e.g. `s3://<long random id number>` indicating S3 storage), and the code assumes that all files are in the same store (because Dataverse assumes only one file path, one set of S3 or Swift credentials, etc.). In the update, the storageidentifier has a prefix indicating a store identifier, such as s3:// or s3tacc://, and Dataverse looks at the properties associated with that store to determine its type (file, s3, or swift) and any type-specific options for that store. With these changes, one can have file1:// and file2:// stores sending files to different paths/file systems, or s3:// and s3tacc:// stores sending data to different S3 instances with different credentials, etc. Together, these changes allow a modified instance to access data from multiple places at once.
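A sketch of how a store prefix could be resolved to its type and options follows. The property names are modeled on a `dataverse.files.<store id>.<option>` pattern and, like the example values (directory path, bucket name, endpoint URL), are assumptions for illustration rather than the POC's actual configuration.

```python
# Hypothetical per-store settings; every key and value here is illustrative.
STORE_PROPERTIES = {
    "dataverse.files.file1.type": "file",
    "dataverse.files.file1.directory": "/usr/local/dvn/data",
    "dataverse.files.s3.type": "s3",
    "dataverse.files.s3.bucket-name": "dataverse-main",
    "dataverse.files.s3tacc.type": "s3",
    "dataverse.files.s3tacc.label": "TACC",
    "dataverse.files.s3tacc.custom-endpoint-url": "https://s3.tacc.example",
}


def store_id_of(storage_identifier):
    """'s3tacc://bucket:17abc' -> 's3tacc'."""
    store_id, sep, _ = storage_identifier.partition("://")
    if not sep:
        raise ValueError(f"no store prefix in {storage_identifier!r}")
    return store_id


def store_config(store_id, props=STORE_PROPERTIES):
    """Collect all options for one store; its 'type' picks the file/s3/swift driver."""
    prefix = f"dataverse.files.{store_id}."  # trailing dot keeps 's3' from matching 's3tacc'
    options = {k[len(prefix):]: v for k, v in props.items() if k.startswith(prefix)}
    if "type" not in options:
        raise KeyError(f"store {store_id!r} is not configured")
    return options
```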

To decide where new data should be sent, the POC code includes a default location and, as of Jan. 2020, allows superusers to specify a ‘storage driver’, selected from a drop-down list, for a given dataverse; that driver will be used for all datasets within that dataverse. For example, a dataverse with “TACC” as its storage driver would have its files stored in the s3tacc:// store. (“TACC” is the label specified in the configuration for the s3tacc store; arbitrary strings can be used.)
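Selecting the store for new files could look roughly like this. The store ids, labels, bucket name, and the `store://bucket:randomid` identifier format are illustrative assumptions. The sketch only shows the rule described above: use the store whose label matches the superuser-selected driver if one is set, otherwise the default.

```python
import secrets

DEFAULT_STORE = "s3"
# Hypothetical: store id -> label shown in the superuser drop-down list.
STORE_LABELS = {"s3": "Default S3", "s3tacc": "TACC", "file1": "Local disk"}


def store_for_dataverse(driver_label=None):
    """Store id for new files in a dataverse: the selected driver, else the default."""
    if driver_label is None:
        return DEFAULT_STORE
    for store_id, label in STORE_LABELS.items():
        if label == driver_label:
            return store_id
    raise ValueError(f"unknown storage driver {driver_label!r}")


def new_storage_identifier(store_id, bucket):
    """Prefix a fresh random object id with the chosen store id
    (identifier format is illustrative, not Dataverse's exact scheme)."""
    return f"{store_id}://{bucket}:{secrets.token_hex(8)}"
```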

There is additional functionality that will be important to creating a production capability. Some are a ‘simple matter of programming’, where the functionality needed is probably not controversial, while others may need further requirements/design discussion.

For testing, it will be useful to set up a proxy server on dev (S3 uploads from clients and Dataverse would go to the proxy, and the proxy would forward them to TACC). Per Chris@TACC, this should allow uploads from any IP address we want without TACC having to alter their firewall settings. To test maximum performance, we would need to bypass the proxy (which wouldn’t be used in production). Which proxy software to use is a question for TDL (I found TinyProxy but have no preference).

Status 12/19: Plan to use TinyProxy, not yet done. Since the upload URL feature signs the unproxied URL, some code changes will be needed in Dataverse to allow a proxy with direct uploads.

Status 1/3/20: Nginx set up in Dec. Configuration adjusted to use the /ebs file system for caching uploads to allow testing of larger files. Using the proxy requires allowing each client IP address. One additional step is required to test upload via the web interface: the user must navigate to the proxy address first and accept the self-signed certificate. Once this is done, the browser will allow the background (ajax) uploads to proceed. Also, nginx does not restart automatically when the machine is rebooted, so it needs to be checked/started before testing.

While the API call to add a file to a Dataset checks whether the user (as identified by their access token) has permission to add a file to the specified Dataset, the API call to retrieve a presigned S3 upload URL currently only checks that the user is a valid Dataverse user. It should deny the request unless the user has permission to add files to the dataset. (This is trivial to do, but until then, a valid Dataverse user could add files to S3 that would not be associated with any Dataverse entries.)

Status 12/19: Fixed. Code will only return an upload URL if the user has permission to add files to the dataset.
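The fix amounts to an authorization check before the URL is created, sketched below. `Dataset.editors` is a hypothetical stand-in for Dataverse's real role/permission system, and `presign` is a hypothetical callback that creates the URL.

```python
from dataclasses import dataclass, field


@dataclass
class Dataset:
    id: int
    # Hypothetical stand-in for "users with permission to add files".
    editors: set = field(default_factory=set)


class PermissionDenied(Exception):
    pass


def request_upload_url(user_id, dataset, presign):
    """Issue a presigned URL only if the caller may add files to this dataset.
    A valid access key alone is not enough."""
    if user_id is None:
        raise PermissionDenied("invalid or missing access key")
    if user_id not in dataset.editors:
        raise PermissionDenied(f"user {user_id} may not add files to dataset {dataset.id}")
    return presign(dataset)
```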

Dataverse was originally designed to use one (configurable) store for files (a local file system, S3, Swift, etc.). The POC works with Dataverse configured with S3; however, as is, all files must be in the same store. To support sending some files to a different store, Dataverse will need to be modified to work with multiple stores. This is potentially useful in general, e.g. to send new data to a new store without having to move existing files. For the remote storage case, if the goal is to send only some files to the new store (specific datasets, only files larger than a cut-off size, as decided by an admin/user based on preference or knowledge of where the data initially resides, etc.), additional work would be needed to implement that policy. Dataverse already keeps track of the store used for a given dataset, so some of the code required to identify which store a file is in already exists.

Status 12/19: Implemented - see Multiple Store section above for details.

To support upload via the Dataverse web interface, additional work will be needed. This could be significant, because the current Dataverse upload is managed via a third-party library and it may be difficult to replace just the upload step without impacting other aspects of the current upload process (e.g. showing previews, allowing editing of file names and metadata, and providing warnings if/when files have the same content or colliding names). If this is too complex, it will be possible to create an alternate upload tab; Dataverse already provides a mechanism for adding alternate upload methods, which has been used to support uploads from Dropbox, rsync, etc.

Status 12/19: I’ve started investigating the upload process in the web interface, looking for ways to turn off the automatic upload to Glassfish and instead trigger an alternate process that requests upload URLs and performs direct uploads. So far, it still looks like it will be possible to do this without changing the upload user interface in any way visible to the user.

Status 1/3/20: Have implemented direct upload through the standard Dataverse upload interface. The upload method used is configured dynamically based on the choice for the current dataverse. Have verified multiple sequential uses of the ‘Select Files To Add’ button as well as selecting multiple files at once. Have also tested files up to 39 GB. Error handling, file cleanup on cancel, and some style updates to the progress bar are still TBD.

Depending on whether the normal processing that Dataverse does during upload is desirable for large files, additional work will be needed to reinstate those steps. These include:

- Thumbnails: shown during upload and in the dataset file list.
- Metadata extraction: currently limited to tabular files (DDI metadata) and FITS (astronomy; currently broken).
- Mimetype analysis: Dataverse can use what the client sends, check the file extension, and/or, in some cases, look at the file contents to determine the mimetype. My sense is that most files will get a reasonable mimetype without having their content inspected. FWIW, most content inspection relies on the first few bytes of a file, so it’s possible this could be done without retrieving the whole file.
- Derived file creation: currently limited to deriving .tab files from spreadsheet files; the .tab files are viewable in TwoRavens and Data Explorer.
- Unzipping: Dataverse automatically expands a top-level zip file into its component files and stores those.
- Future: possibilities such as virus checks, etc.
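Since most content inspection needs only the first few bytes, the sketch below fetches just those bytes with an HTTP Range request and checks a few well-known magic numbers. It assumes a presigned GET URL, which the POC does not currently create, and the signature table is a small illustrative sample, not Dataverse's actual detection logic. Formats such as .docx and .xlsx also start with the ZIP signature, so real detection needs more than this.

```python
import urllib.request

# Illustrative sample of well-known file signatures ("magic numbers").
MAGIC = [
    (b"\x89PNG\r\n\x1a\n", "image/png"),
    (b"%PDF-", "application/pdf"),
    (b"PK\x03\x04", "application/zip"),
    (b"\x1f\x8b", "application/gzip"),
    (b"SIMPLE  =", "application/fits"),  # FITS primary header starts with this card
]


def range_request(presigned_get_url, n_bytes=512):
    """Build (but do not send) a GET asking S3 for only the first n_bytes."""
    return urllib.request.Request(
        presigned_get_url, headers={"Range": f"bytes=0-{n_bytes - 1}"}
    )


def sniff_mimetype(head, fallback="application/octet-stream"):
    """Guess a mimetype from the leading bytes; fall back if nothing matches."""
    for signature, mimetype in MAGIC:
        if head.startswith(signature):
            return mimetype
    return fallback
```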

There is also functionality that touches the files that can be triggered at other times. In the POC, these are still enabled. These include:

- Full-text indexing: if enabled and the file is under the configured size limit, whenever indexing occurs, including after any dataset change or when triggered by an admin/cron job.
- Thumbnails: I think Dataverse tries to create these, if they don’t exist, whenever the data is displayed. I have not yet checked whether it is ‘smart’, i.e. only reads the file if it’s a type for which previews can be created.
- Previews: for any file types for which a previewer is registered. Some previewers are smart: the video previewer plays the video as the file streams, so a preview would not download the whole file unless the user watched the whole video. However, most previewers would try to download the full file, and there isn’t currently a mechanism to limit the size of files for which a preview is allowed. Note that in 4.18+, previews of published files can also be embedded in the file page.

Simply turning everything back on, which would involve Dataverse retrieving the entire file from S3 one or more times, would be relatively simple, though it would have performance impacts. It may make sense to add configuration options that allow any of these steps to be turned on/off per store, or up to a given file size limit, etc. It would also be possible to shift more of this processing to the background (e.g. creating a .tab file is already done after the HTTP call to upload the file returns), although doing steps like unzipping this way would mean the Dataverse web interface could not show the list of files inside the zip during upload. More complex options, such as moving such processing to a machine local to the S3 store, are also possible (e.g. an app that would inspect the remote file and only send a new mimetype or extracted metadata to Dataverse, instead of Dataverse having to pull the entire file from S3 itself).

Status 12/19: Still TBD depending on TDL requirements. (FWIW: One relatively simple option for ingest only would be to adopt the QDR changes that allow ingest to be done manually after upload.)

Status 1/3/20: Still TBD. As part of merging with the main Dataverse release, I plan to add a size limit for each store, below which all ingest processing except unzipping will be done.
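The planned per-store size limit could be applied roughly as follows. The step names, store ids, and limit values are hypothetical, and treating a store with no configured limit as "no byte-level processing" is an assumption. The rule itself is the one described above: below a store's limit, run everything except unzipping.

```python
# Byte-reading steps from the list above; unzipping is deliberately absent,
# since it stays off for direct uploads.
INGEST_STEPS = ["thumbnail", "metadata_extraction", "mimetype_analysis", "derived_files"]

# Hypothetical per-store limits in bytes; None means no byte-level processing.
STORE_INGEST_LIMITS = {"s3": 2 * 1024**3, "s3tacc": None}


def steps_to_run(store_id, file_size, limits=STORE_INGEST_LIMITS):
    """Return the ingest steps to run for a file of file_size bytes in store_id."""
    limit = limits.get(store_id)
    if limit is None or file_size >= limit:
        return []  # register the file with client-supplied metadata only
    return list(INGEST_STEPS)
```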

With the POC, an MD5 hash is created on the local machine as the file is streamed, and this is sent to Dataverse to store as metadata (allowing the file contents to be compared with the original MD5 hash in the future to validate its integrity). Dataverse currently allows other algorithms (e.g. SHA-1, SHA-512). It should be possible to create an MD5 hash during upload through the Dataverse web interface as well. Allowing the hash algorithm to change would require adapting the DVUploader and the new upload code for the Dataverse web interface to determine Dataverse’s selected algorithm and generate the appropriate hash. (S3 also calculates a hash during upload, but it varies depending on whether the upload was done in multiple pieces. In theory, one could leverage that instead, but having a hash computed on the original machine seems like a stronger approach.) Dataverse also allows you to change the hash algorithm and then update the hash for existing files. This requires retrieving each file and computing the hash locally, so it may be something that should not be done for large files, for files in some stores, etc.

Status 12/19: TBD

Status 1/3/20: Implemented MD5 hashing using a library that can also create hashes with other algorithms.
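Below is a sketch of algorithm-agnostic streaming hashing, together with the commonly observed S3 multipart ETag, which illustrates why S3's own hash depends on how the upload was split. The mapping from Dataverse algorithm names to `hashlib` names is an assumption. The ETag formula reflects widely observed S3 behavior for multipart uploads without KMS encryption, not a documented guarantee.

```python
import hashlib

# Dataverse algorithm names mentioned above -> hashlib names (mapping is an assumption).
ALGORITHMS = {"MD5": "md5", "SHA-1": "sha1", "SHA-512": "sha512"}


def streaming_checksum(chunks, algorithm="MD5"):
    """Hash an iterable of byte chunks with the algorithm Dataverse is set to use."""
    h = hashlib.new(ALGORITHMS[algorithm])
    for chunk in chunks:
        h.update(chunk)
    return h.hexdigest()


def multipart_etag(parts):
    """ETag commonly reported by S3 for a multipart upload: MD5 of the
    concatenated binary MD5s of each part, plus '-<number of parts>'.
    Observed behavior, not an AWS guarantee."""
    digests = b"".join(hashlib.md5(p).digest() for p in parts)
    return f"{hashlib.md5(digests).hexdigest()}-{len(parts)}"
```

The same bytes uploaded in one piece versus two pieces therefore get different S3 ETags, while `streaming_checksum` returns the same value regardless of chunking.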

Parallelism: The DVUploader currently sends one file at a time. A single HTTP transfer may not use all of the available bandwidth, so sending multiple files in parallel would allow more of it to be used. (Using more bandwidth for a single large file is harder and is one of the strengths of Globus/GridFTP.) It would not be much work to enhance the DVUploader to send several files at once. There might still be a bottleneck at Dataverse, where the API call handles a single file and results in a database update for the entire dataset; that update has to complete before another API call can succeed. Adding an API call that allows multiple files to be added at once could address that. It might also be possible to parallelize the upload in the web interface (it already works more like this, as it streams all of the files up and only updates the dataset in the database when you ‘save’ all the changes). Whether these changes are worth the effort probably depends on the use cases and on how much performance gain the direct S3 upload design itself provides.

Status 1/20: The web interface parallelizes upload (subject to the limits on connections managed by the browser).
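For the DVUploader side, a minimal sketch of parallel transfers with serialized add calls is shown below. `put_file` and `add_to_dataset` are hypothetical callbacks standing in for the presign+PUT step and the /add API call. The lock models the database bottleneck described above; it is not existing DVUploader code.

```python
import threading
from concurrent.futures import ThreadPoolExecutor, as_completed


def upload_all(files, put_file, add_to_dataset, max_workers=4):
    """Send several files to S3 at once, but register them with Dataverse one
    at a time, since each add call updates the whole dataset in the database.

    put_file(path) -> storage identifier   (hypothetical: presign + HTTP PUT)
    add_to_dataset(path, storage_id)       (hypothetical: the /add API call)
    """
    add_lock = threading.Lock()
    results = {}

    def worker(path):
        storage_id = put_file(path)  # bulk data transfer runs in parallel
        with add_lock:  # database updates are serialized
            add_to_dataset(path, storage_id)
        return path, storage_id

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(worker, f) for f in files]
        for future in as_completed(futures):
            path, storage_id = future.result()  # re-raises any worker exception
            results[path] = storage_id
    return results
```

A batch add API, as suggested above, would replace the per-file locked call with one call after all transfers finish.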